When you analyse the profitability of a telematics solution, you need to take a thorough look at the operating costs. A significant factor is the amount of sensor data collected, sent over a wireless network, and stored. Here we share some of our thoughts on this issue.

Let’s take one step back.

Besides cost, the sheer volume of data resulting from IoT solutions is a global issue. There is a huge, constantly increasing number of connected devices able to produce a continuous data stream. If all IoT devices sent all their data unfiltered, then eventually, the available wireless bandwidth or even storage space would not be able to cope.

Edge computing and intelligent devices offer a solution. If you filter the data in the telematics units or “sensor gateways”, you reduce the amount of data sent over mobile networks.

Local processing and filtering can discard irrelevant data so that only significant summaries or statistics are sent to the cloud for further use. This lets us optimize the data volume and keep costs competitive.
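As an illustration of sending summaries instead of raw samples, the minimal Python sketch below reduces each fixed-size window of sensor readings to one record (count, minimum, maximum, average). It assumes readings arrive as plain numbers and that a fixed window size is acceptable; the names `Summary`, `summarize` and `summarize_windows` are hypothetical and not part of any Aplicom API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    count: int
    minimum: float
    maximum: float
    average: float


def summarize(readings: list[float]) -> Summary:
    """Reduce one window of raw readings to a single compact summary record."""
    if not readings:
        raise ValueError("cannot summarize an empty window")
    return Summary(
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
    )


def summarize_windows(readings: list[float], window: int) -> list[Summary]:
    """Split the stream into consecutive windows and summarize each one."""
    return [summarize(readings[i:i + window]) for i in range(0, len(readings), window)]
```

For example, ten minutes of one-per-second temperature readings (600 values) summarized in 60-sample windows leave only 10 records to transmit. Which statistics are worth keeping depends on how the data is used in the cloud.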

A simple example: follow the position of a vehicle

Telematics devices can send data within very short time intervals. Every now and then, we meet customers who want an update on the position of each one of their vehicles once per second. It might look like a good idea, and technically, it’s possible.
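A rough calculation shows how quickly a fixed one-second interval adds up. The sketch below assumes a hypothetical fleet of 1,000 vehicles, a 50-byte position payload, vehicles reporting around the clock, and no protocol overhead; real payload sizes and duty cycles will differ.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def messages_per_day(interval_s: float) -> float:
    """Position messages one vehicle sends per day at a fixed reporting interval."""
    return SECONDS_PER_DAY / interval_s


def fleet_mb_per_month(interval_s: float, vehicles: int,
                       payload_bytes: int, days: int = 30) -> float:
    """Raw payload volume for a fleet in megabytes, ignoring protocol overhead."""
    total_bytes = messages_per_day(interval_s) * vehicles * payload_bytes * days
    return total_bytes / 1_000_000


# Hypothetical fleet: 1,000 vehicles, 50-byte payload, reporting 24/7.
for interval in (1, 10, 60):
    print(f"{interval:>3} s: {fleet_mb_per_month(interval, 1000, 50):,.0f} MB/month")
```

Under these assumptions, a one-second interval produces about 129,600 MB (roughly 130 GB) of raw payload per month, sixty times the volume of a one-minute interval.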

In practice, however, a one-second interval rarely makes sense. In fact, any fixed interval for position data is suboptimal: you get either too much or too little information. A vehicle moving on a highway should send its position more frequently than a vehicle moving slowly. If the vehicle has stopped, there is no point in receiving the same position over and over; its last position before the stop is enough.
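The reasoning above can be expressed as a small decision function. This is a minimal Python sketch, not Aplicom firmware: the speed thresholds, the intervals and the `STOP_SPEED_KMH` value are hypothetical, and suitable values depend on the application.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical tuning value: below this speed the vehicle is treated as stopped.
STOP_SPEED_KMH = 2.0


@dataclass
class Fix:
    timestamp_s: float
    speed_kmh: float
    lat: float
    lon: float


def interval_for_speed(speed_kmh: float) -> float:
    """Faster vehicles report more often (illustrative thresholds)."""
    if speed_kmh >= 80.0:
        return 10.0
    if speed_kmh >= 30.0:
        return 30.0
    return 60.0


class AdaptiveReporter:
    """Decides, fix by fix, whether a position is worth transmitting."""

    def __init__(self) -> None:
        self.last_sent: Optional[Fix] = None
        self.moving = False

    def should_send(self, fix: Fix) -> bool:
        moving = fix.speed_kmh >= STOP_SPEED_KMH
        if self.last_sent is None or moving != self.moving:
            send = True   # first fix, or the vehicle has just started or stopped
        elif not moving:
            send = False  # still stopped: the position sent at the stop is enough
        else:
            elapsed = fix.timestamp_s - self.last_sent.timestamp_s
            send = elapsed >= interval_for_speed(fix.speed_kmh)
        self.moving = moving
        if send:
            self.last_sent = fix
        return send
```

A parked vehicle therefore sends one position when it stops and nothing more until it starts moving again, while a vehicle on the highway reports every 10 seconds in this example.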

Many entry-level telematics devices can only send data with a fixed interval. We think that it makes sense to use more advanced logic to optimize the volume and quality of collected data.

Of course, how you do this depends on the application. Telemetry in Formula One (F1) racing is a good example of a very special set of requirements. F1 teams use extensive real-time telemetry data from their racing cars. It produces a massive amount of data, but there are only two cars per team, and the data is analysed in real time by advanced software and several race engineers. If there were thousands of cars on the track continuously, the resulting volume of data would force the teams to reduce it.

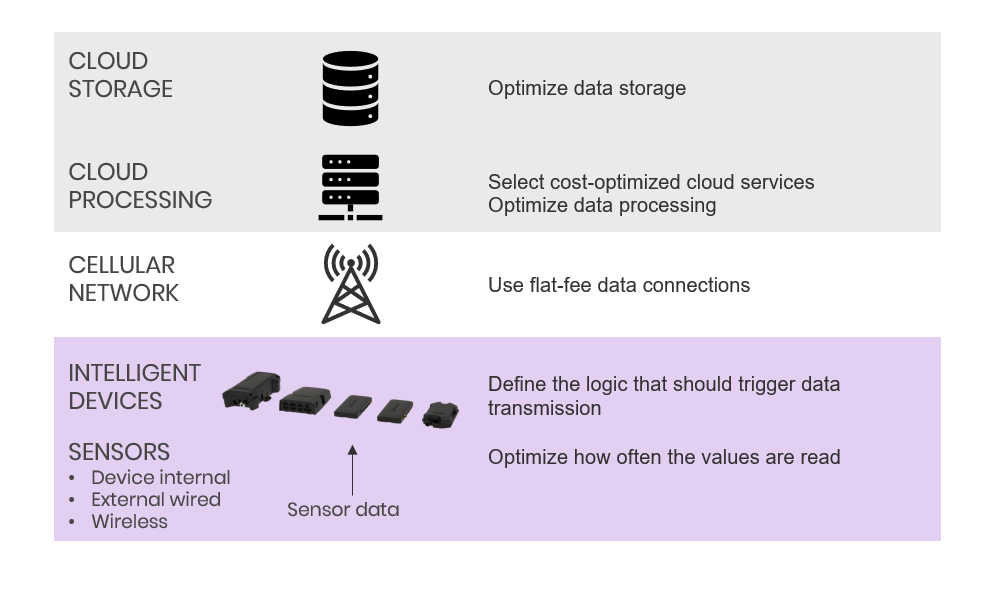

How to reduce data-related costs

Handling large amounts of data produces three main costs:

- Transmission – data transmission fees

- Processing – cloud service processing fees

- Storage – cloud storage fees

Data transmission is typically the most significant of these. Less important, but often surprising, is the cost of data storage. An individual user can store small amounts of data in a commercial cloud for free, but large, secure and well-managed data storage comes at a price.

You can reduce data-related costs by:

- using flat-fee data connections and carefully monitoring data usage limits

- optimising data storage (e.g., managed disk vs. cloud database vs. data lake) and the retention policy: how long the data stays relevant, and how long it should be stored

- selecting cost-optimized cloud services and optimising the software implementation for data processing
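A retention policy, as mentioned in the list above, can be as simple as a maximum age per data category. The Python sketch below assumes hypothetical categories and periods and records held as dictionaries; in a real system the same rule would usually be enforced by the storage service's own lifecycle or expiry features.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category; choose your own.
RETENTION = {
    "raw_position": timedelta(days=30),
    "trip_summary": timedelta(days=365),
    "diagnostics": timedelta(days=90),
}


def is_expired(category: str, recorded_at: datetime, now: datetime) -> bool:
    """True when a record has outlived the retention period of its category."""
    return now - recorded_at > RETENTION[category]


def purge(records: list[dict], now: datetime) -> list[dict]:
    """Keep only the records that are still within their retention period."""
    return [r for r in records if not is_expired(r["category"], r["recorded_at"], now)]
```

Keeping raw positions for a short time and aggregated trip summaries for longer is one way to balance storage cost against the value of historical data.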

However, before optimising anything, you should carefully consider the data itself and plan ahead accordingly. Ask these questions about the data:

- Why are we collecting this data?

- How do we utilise the collected data in concrete terms?

- How do we store the data and maintain the database that holds it?

- Is all the data intended for collection really relevant?

- How often does the data change?

- What is the logic that should trigger data transmission?
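The last two questions are closely related: if a value changes slowly, a change-based trigger can replace a fixed interval. A common technique is a deadband, sketched below in Python under the assumption of a single numeric signal. A value is sent only when it has moved far enough from the last sent value, with a periodic "heartbeat" so the receiver knows the device is alive. The class name and parameters are hypothetical.

```python
from typing import Optional


class DeadbandTrigger:
    """Send a value only when it differs enough from the last sent value,
    or when the maximum silence period has elapsed (a heartbeat)."""

    def __init__(self, deadband: float, max_silence_s: float) -> None:
        self.deadband = deadband
        self.max_silence_s = max_silence_s
        self.last_value: Optional[float] = None
        self.last_time: Optional[float] = None

    def should_send(self, value: float, timestamp_s: float) -> bool:
        if (
            self.last_value is None  # nothing sent yet
            or abs(value - self.last_value) >= self.deadband  # significant change
            or timestamp_s - self.last_time >= self.max_silence_s  # heartbeat due
        ):
            self.last_value = value
            self.last_time = timestamp_s
            return True
        return False
```

With a 1.0 degree deadband, for example, small temperature fluctuations are never transmitted, while a real change is reported immediately.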

Also think about the future. A piece of data might not be relevant now, but will you need it later? For example, artificial intelligence methods such as anomaly detection rely on analysing large data sets, so data that seems unnecessary today may prove valuable later.

In some cases, you can even find alternative uses for your data, as described in the case study Cargotec: Keeping Cargo Flowing in Crisis Conditions.

Aplicom devices to the rescue

Aplicom telematics devices allow pre-processing of collected sensor data in an uncomplicated way. The Aplicom A-Series Telematics Software Configurator makes optimizing the volume of data easy, without any programming. And in case you need something more specialised, the device is fully programmable to run more complex application logic.

A good example of how the A-Series Configurator can be used is setting up “speed zones” for sending GNSS position data:

First, you define a low-speed zone and a high-speed zone. The speed limit between the zones is configurable, so that the definition of “low speed” or “high speed” is up to you.

Now you can apply different sets of rules for each zone. You can define a time interval and other parameters for sending a position, as well as a range of actions that can be defined by logical rules. As a result, you collect only the data you want and optimise the volume.
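The speed-zone idea can be modelled in a few lines. The Python sketch below only illustrates the logic; it is not the A-Series Configurator's format or parameter set. The 60 km/h boundary, the intervals, and the use of a distance threshold as the "other parameter" are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ZoneRule:
    send_interval_s: float  # send when this much time has passed since the last report
    min_distance_m: float   # or as soon as the vehicle has moved this far


@dataclass(frozen=True)
class SpeedZones:
    limit_kmh: float  # configurable boundary between low and high speed
    low: ZoneRule
    high: ZoneRule

    def rule_for(self, speed_kmh: float) -> ZoneRule:
        """Pick the rule set of the zone the current speed falls into."""
        return self.high if speed_kmh >= self.limit_kmh else self.low


def should_send(zones: SpeedZones, speed_kmh: float,
                elapsed_s: float, distance_m: float) -> bool:
    """Apply the active zone's rules to the time and distance since the last report."""
    rule = zones.rule_for(speed_kmh)
    return elapsed_s >= rule.send_interval_s or distance_m >= rule.min_distance_m


# Hypothetical example values: sparse reports below 60 km/h, frequent ones above.
ZONES = SpeedZones(
    limit_kmh=60.0,
    low=ZoneRule(send_interval_s=60.0, min_distance_m=200.0),
    high=ZoneRule(send_interval_s=15.0, min_distance_m=500.0),
)
```

Keeping the zone boundary and the per-zone rules as data, rather than hard-coding them, mirrors the idea that “low speed” and “high speed” are yours to define.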

Aplicom A-Series devices offer you a choice of action triggers: time, turning on power or ignition, movement detection on the accelerometer, and so on. Optimised application logic can, of course, be used for other purposes as well.
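To show how several triggers can drive one set of actions, here is a minimal Python sketch of an event-to-action rule table. The trigger names, action names and the accelerometer threshold are hypothetical; the movement check is a deliberately simple comparison of the acceleration magnitude against 1 g, not how any particular device implements motion detection.

```python
import math
from enum import Enum, auto


class Trigger(Enum):
    TIMER = auto()        # periodic timer expired
    POWER_ON = auto()     # device powered up
    IGNITION_ON = auto()  # vehicle ignition switched on
    MOVEMENT = auto()     # accelerometer detected movement


# Hypothetical rule table mapping each trigger to the actions it should cause.
RULES = {
    Trigger.TIMER: ["send_position"],
    Trigger.POWER_ON: ["send_status"],
    Trigger.IGNITION_ON: ["send_position", "start_trip"],
    Trigger.MOVEMENT: ["send_position"],
}


def movement_detected(ax: float, ay: float, az: float, threshold_g: float = 0.05) -> bool:
    """Very simple movement check: at rest the acceleration magnitude is about 1 g."""
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    return abs(magnitude - 1.0) > threshold_g


def actions_for(events: list[Trigger]) -> list[str]:
    """Collect the actions for a batch of events, in order and without duplicates."""
    actions: list[str] = []
    for event in events:
        for action in RULES.get(event, []):
            if action not in actions:
                actions.append(action)
    return actions
```

Deduplicating the actions means that if ignition and movement are detected at the same moment, only one position message is sent.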

Whatever you decide to do, you should use a device platform that allows you to implement the application logic you require, either by programming or by configuration. Our team of specialists would be happy to work with you, sharing their expertise and long experience to co-create the optimal device solution for your needs.

Learn more about the powerful A-Series device configurator in the A-Series Configuration Guide or contact us for a free consultation.

The leading cloud providers publish detailed information about their service pricing, for example:
